Building Hospital Price Transparency Chatbot

with OpenAI, LangChain and Chainlit

[AI Seminar] College of Intelligent Computing, CGU

2024-02-20 @ Taoyuan City, Taiwan

By Jazz Yao-Tsung Wang

Director of Engineering, Innova Solutions

Chairman, Taiwan Data Engineering Association

Agenda

Hospital Price Transparency

Effective on 2021-01-01

Civil monetary penalty (CMP) notices issued by CMS

Civil monetary penalty (CMP) = 民事罰款

Source: https://www.cms.gov/hospital-price-transparency/enforcement-actions

Shop site developed by Taipei DXP SBP team

Patients can search procedure prices by payer name and medical network

Machine Readable Files (MRFs)

Hospitals must publish links to their MRFs

Source: https://shop.baconcountyhospital.com/pt-machinereadable.html

[ WHY ] Will AI Chatbot be the "new" UI?

Call Center

Have you ever called a help desk?

Image Source: "Telework Guy", by j4p4n, OpenClipArt, 2020-06-05

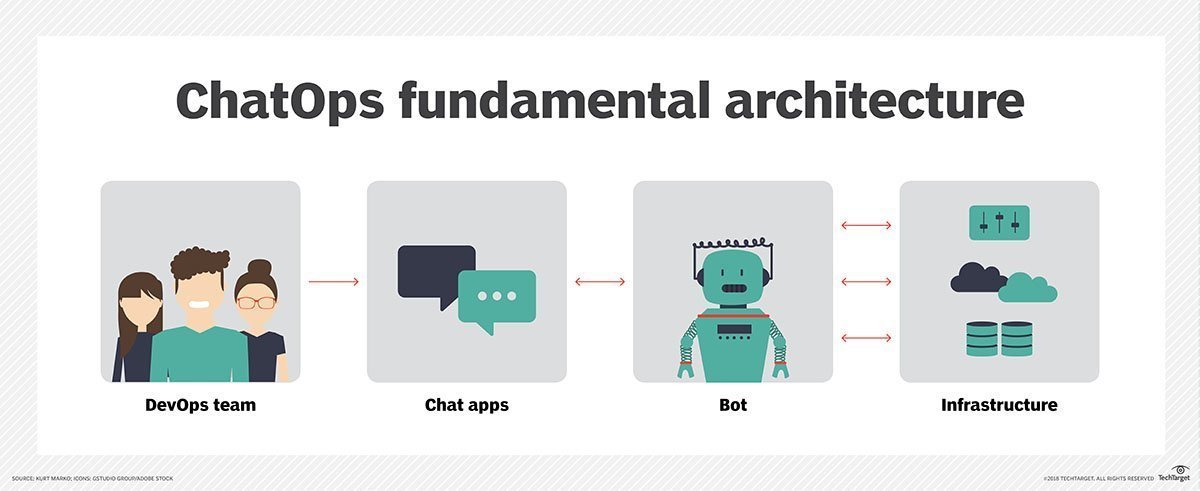

Slack Chatbot

ChatOps

Source: "Three ChatOps examples demonstrate DevOps efficiency", 2018-02-05

LINE Chatbot

Source: "LINE Bot Designer"

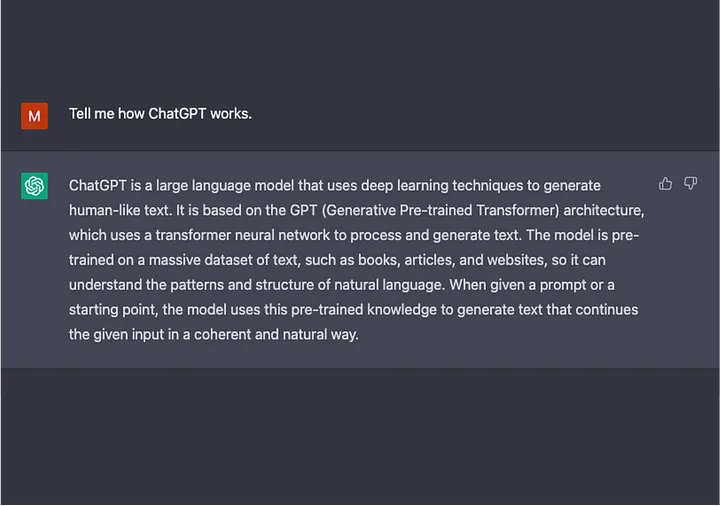

ChatGPT

NLP (Natural language processing)

Source: "How ChatGPT Works: The Model Behind The Bot", 2023-01-31

[ WHY ] Multilingual Support?

[ Pain ] Complex Architecture

(Note: the confidential diagram shown here has been removed.)

Source: Reverse Engineering PlantUML diagram by Jazz Wang

[ Gain ] Needs to support multiple languages

ChatGPT supports 95 languages

Benefits Of Multilingual ChatGPT

Source: "List of languages supported by ChatGPT", Botpress Community, 2023-03-23

Final Result

Demo site: http://chat.3du.me:8000

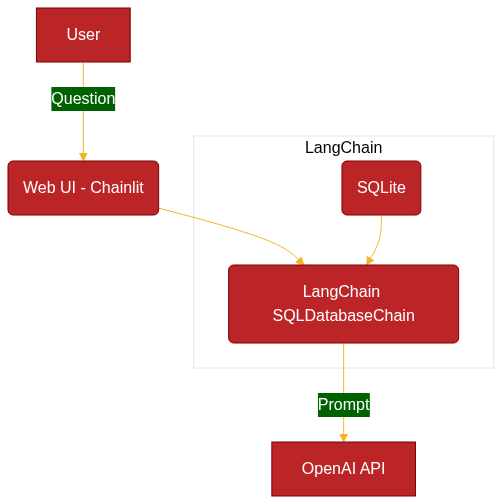

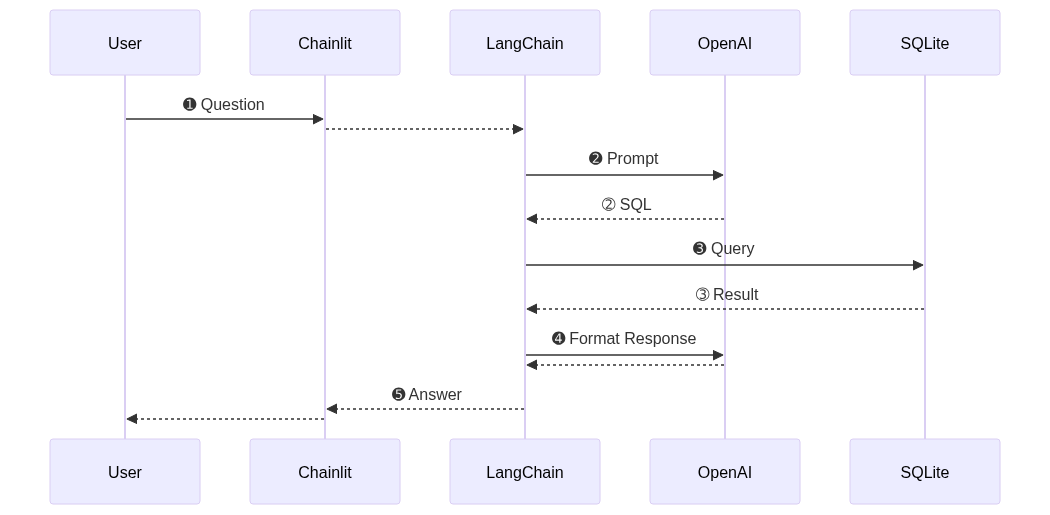

[ HOW ] High-level Architecture

[ HOW ] POC Implementation

P.S. I tried using a local GPT4All-J LLM instead of the OpenAI GPT-3.5-Turbo LLM.

[Deep Dive] OpenAI API

Most people use ChatGPT (free) or ChatGPT Plus (paid).

By subscribing to the OpenAI API, you can build applications programmatically.

Source: https://platform.openai.com/apps
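
A minimal sketch of what "programmatically" can look like, using only the Python standard library. It builds a request for the Chat Completions REST endpoint without sending it. The system prompt, the sample question and the `build_chat_request` helper name are assumptions made for illustration; they are not taken from the talk.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(question: str, api_key: str,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions HTTP request."""
    payload = {
        "model": model,
        "messages": [
            # Hypothetical system prompt, for illustration only.
            {"role": "system", "content": "You answer hospital price questions."},
            {"role": "user", "content": question},
        ],
        "temperature": 0,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY", "sk-placeholder")
    req = build_chat_request("How much does an MRI cost?", key)
    print(req.get_method(), req.full_url)
    # Sending the request is billed per token, so it is left commented out:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

Keeping the request builder separate from the network call makes it easy to inspect the payload before spending any API credit.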

[Deep Dive] OpenAI API Capability

[Deep Dive] OpenAI API Keys

[Deep Dive] OpenAI API Usage

[Deep Dive] OpenAI Billing Auto Recharge

If you don't plan to run experiments over the long term, you can Cancel Plan to reduce cost.

[Deep Dive] LangChain - Introduction

LangChain is a framework for developing applications powered by language models.

LangChain enables applications that are:

- Data-aware: connect a language model to other sources of data

- Agentic: allow a language model to interact with its environment

The main value props of LangChain are:

- Components: abstractions for working with language models.

- Off-the-shelf chains: components for accomplishing specific higher-level tasks

[Deep Dive] LangChain - Modules

- Model I/O : Interface with language models

- Data connection : Interface with application-specific data

- Chains : Construct sequences of calls

- Agents : Let chains choose which tools to use given high-level directives

- Memory : Persist application state between runs of a chain

- Callbacks : Log and stream intermediate steps of any chain

[Deep Dive] LangChain - Model I/O

- Prompts: Templatize, dynamically select, and manage model inputs

- Language models: Make calls to language models through common interfaces

- Output parsers: Extract information from model outputs

Source: LangChain Document - Model I/O
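
The three Model I/O pieces can be pictured with a small stand-alone sketch. It does not use LangChain: `fake_llm` is a stand-in that returns a canned string, and the template text and parser are assumptions chosen only to show how prompt, model and output parser fit together.

```python
from string import Template

# Prompt: a reusable template with a named slot.
PROMPT = Template("List three procedures related to $topic, separated by commas.")


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; always returns a canned answer."""
    return "MRI scan, CT scan , X-ray"


def parse_comma_list(text: str) -> list[str]:
    """Output parser: turn raw model text into a clean Python list."""
    return [item.strip() for item in text.split(",") if item.strip()]


prompt_text = PROMPT.substitute(topic="imaging")
procedures = parse_comma_list(fake_llm(prompt_text))
print(procedures)
```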

[Deep Dive] LangChain - Data Connection

- Document loaders: Load documents from many different sources

- Document transformers: Split, convert into Q&A format, drop redundant documents

- Text embedding models: Turn unstructured text into vectors

- Vector stores: Store and search over embedded data

- Retrievers: Query your data
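
The data connection steps above can be sketched end to end with the standard library. This is a toy under stated assumptions: the "embedding" is a plain bag-of-words count rather than a learned model, `ToyVectorStore` is a hypothetical class (not LangChain's), and the sample text is made up.

```python
import math
import re
from collections import Counter


def split_sentences(text: str) -> list[str]:
    """Document transformer: split raw text into sentence-sized chunks."""
    return [s for s in re.split(r"(?<=\.)\s+", text.strip()) if s]


def embed(text: str) -> Counter:
    """Toy embedding: word counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Stores (chunk, vector) pairs and retrieves the most similar chunks."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, chunks: list[str]) -> None:
        self.items.extend((chunk, embed(chunk)) for chunk in chunks)

    def search(self, query: str, k: int = 1) -> list[str]:
        query_vec = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(query_vec, item[1]),
                        reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


# "Loaded" document (made-up text standing in for a real loader's output).
document = ("Hospitals must publish machine readable files. "
            "Patients can search procedure prices by payer name. "
            "Penalties are issued by CMS.")

store = ToyVectorStore()
store.add(split_sentences(document))
print(store.search("search prices by payer", k=1))
```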

[Deep Dive] LangChain - Chains

Chain = a sequence of calls to components, which can include other chains.

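    # Legacy LangChain import paths, as used at the time of the talk.
    from langchain.chains import LLMChain
    from langchain.llms import OpenAI
    from langchain.prompts import PromptTemplate

    llm = OpenAI(temperature=0.9)  # reads OPENAI_API_KEY from the environment
    prompt = PromptTemplate(
        input_variables=["product"],
        template="What is a good name for a company that makes {product}?",
    )

    # Link the prompt and the model into a chain, then run it.
    chain = LLMChain(llm=llm, prompt=prompt)
    print(chain.run("colorful socks"))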

Source: LangChain Document - Chains
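
To make the definition concrete without an API key, here is a library-free sketch of a chain as a sequence of calls, where one chain can be a step in another. `make_chain` and `fake_llm` are hypothetical names, and the canned company name is invented; this illustrates the idea rather than how LangChain implements it.

```python
from typing import Callable

Step = Callable[[str], str]


def make_chain(*steps: Step) -> Step:
    """Return a function that feeds its input through each step in order."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run


def format_prompt(product: str) -> str:
    return f"What is a good name for a company that makes {product}?"


def fake_llm(prompt: str) -> str:
    """Stand-in for a model call; returns a canned, untidy answer."""
    return "  Rainbow Sock Co.\n"


def clean(text: str) -> str:
    return text.strip()


name_chain = make_chain(format_prompt, fake_llm, clean)
# A chain is itself a step, so it can be nested inside another chain.
shout_chain = make_chain(name_chain, str.upper)

print(name_chain("colorful socks"))
print(shout_chain("colorful socks"))
```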

[Deep Dive] LangChain - Memory

By default, Chains and Agents are stateless.

LangChain provides memory components in two forms:

- helper utilities for managing and manipulating previous chat messages.

- ways to incorporate these utilities into chains.

Source: LangChain Document - Memory
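
A rough sketch of both forms: a small helper that keeps recent chat messages, and a `chat` function that feeds them back into each prompt. `BufferMemory`, `chat` and `counting_llm` are hypothetical names; the fake model only reports how many human messages it can see, which makes the effect of memory visible.

```python
from collections import deque


class BufferMemory:
    """Keeps only the most recent conversation turns."""

    def __init__(self, max_turns: int = 2) -> None:
        self.turns = deque(maxlen=max_turns)

    def add(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))

    def as_text(self) -> str:
        return "".join(f"Human: {h}\nAI: {a}\n" for h, a in self.turns)


def chat(memory: BufferMemory, message: str, llm) -> str:
    """Prepend remembered turns to the prompt, then store the new turn."""
    prompt = memory.as_text() + f"Human: {message}\nAI:"
    reply = llm(prompt)
    memory.add(message, reply)
    return reply


def counting_llm(prompt: str) -> str:
    """Stand-in model: reports how many human messages are in the prompt."""
    return f"I can see {prompt.count('Human:')} human message(s)."


memory = BufferMemory(max_turns=2)
replies = [chat(memory, text, counting_llm)
           for text in ("Hi", "MRI price?", "And a CT scan?", "Thanks")]
for reply in replies:
    print(reply)
```

Capping the buffer at a fixed number of turns is one simple way to keep prompts (and token cost) bounded.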

[Deep Dive] LangChain - Agents

The core idea of agents is to use an LLM to choose a sequence of actions to take.

In chains, a sequence of actions is hardcoded (in code).

In agents, a language model is used as a reasoning engine.

Source: LangChain Document - Agents

Agent types:

- Zero-shot ReAct <-- We use this for the POC

- Structured input ReAct

- OpenAI Functions

- Conversational

- Self ask with search

- ReAct document store

Source: LangChain Document - Agent types
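
The ReAct idea (the model emits an action, the program runs a tool, the observation is fed back) can be sketched without any LLM. Everything below is an assumption for illustration: `scripted_llm` returns hand-written text in a ReAct-like format, `lookup_price` is a hypothetical tool, and the price is made up. It is not LangChain's agent implementation.

```python
import re


def lookup_price(procedure: str) -> str:
    """Hypothetical tool backed by made-up prices."""
    prices = {"MRI": "1200 USD"}
    return prices.get(procedure.strip(), "unknown")


TOOLS = {"lookup_price": lookup_price}


def scripted_llm(scratchpad: str) -> str:
    """Stand-in for the LLM: first asks for a tool, then answers."""
    if "Observation:" not in scratchpad:
        return ("Thought: I need the price.\n"
                "Action: lookup_price\n"
                "Action Input: MRI")
    observation = scratchpad.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I know the answer.\nFinal Answer: An MRI costs {observation}."


def run_agent(question: str, llm, tools, max_steps: int = 5) -> str:
    """Loop: ask the model, run the chosen tool, append the observation."""
    scratchpad = f"Question: {question}\n"
    for _ in range(max_steps):
        output = llm(scratchpad)
        final = re.search(r"Final Answer:\s*(.*)", output, re.DOTALL)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\s*\nAction Input:\s*(.*)", output)
        if not action or action.group(1) not in tools:
            return "Agent stopped: could not parse a valid action."
        observation = tools[action.group(1)](action.group(2))
        scratchpad += f"{output}\nObservation: {observation}\n"
    return "Agent stopped: step limit reached."


print(run_agent("How much is an MRI?", scripted_llm, TOOLS))
```

The step limit matters in practice: a real model can loop or emit unparseable text, so the loop needs a way to stop.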

[Deep Dive] Chainlit

Chainlit is an open-source Python package

to build production ready Conversational AI.

Key features

- Build fast: Integrate seamlessly with an existing code base or start from scratch in minutes

- Copilot: Embed your chainlit app as a Software Copilot

- Data persistence: Collect, monitor and analyze data from your users

- Visualize multi-step reasoning: Understand the intermediary steps that produced an output at a glance

- Iterate on prompts: Deep dive into prompts in the Prompt Playground to understand where things went wrong and iterate

[Deep Dive] Chainlit integration with LangChain

Reference example code is available for integrating Chainlit with LangChain.


[Learnt] Limitations of LangChain and LocalAI

Lesson #1: Prompt Observability

With Chainlit, you can see the prompt created by LangChain and the response from the OpenAI GPT-3.5/4 LLM.

Lesson #2: GPT4All-J can't code like GPT-3.5-Turbo

Question: which is better, a multimodal LLM or a code LLM?

- TODO: explore Meta's Code Llama-2 LLM

Source: https://huggingface.co/codellama

Lesson #3: Impact of Database Schema

What if the LLM can't understand the meaning of the schema?
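
One way to see the problem is to look at what the model is actually given. The sketch below reads the table definitions back out of two in-memory SQLite databases and places them in a prompt. Both schemas and the prompt wording are hypothetical; the point is only that cryptic names such as `t1.c2` carry no meaning, while descriptive names do.

```python
import sqlite3


def schema_for_prompt(conn: sqlite3.Connection) -> str:
    """Return the CREATE TABLE statements SQLite has stored for this database."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    return "\n".join(row[0] for row in rows)


def build_prompt(conn: sqlite3.Connection, question: str) -> str:
    """Assemble a text-to-SQL prompt (hypothetical wording)."""
    return (f"Given this SQLite schema:\n{schema_for_prompt(conn)}\n"
            f"Write one SQL query that answers: {question}")


# Hypothetical schema with cryptic names: hard for a model to interpret.
cryptic = sqlite3.connect(":memory:")
cryptic.execute("CREATE TABLE t1 (c1 TEXT, c2 TEXT, c3 REAL)")

# Same shape, descriptive names: the schema explains itself.
descriptive = sqlite3.connect(":memory:")
descriptive.execute(
    "CREATE TABLE procedure_price "
    "(procedure_name TEXT, payer_name TEXT, negotiated_rate_usd REAL)"
)

question = "What does an MRI cost for a given insurer?"
print(build_prompt(cryptic, question))
print(build_prompt(descriptive, question))
```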

[Learnt] Chainlit Local Cache - SQLite

Chainlit can store users' questions in SQLite.

This helps reduce the cost of OpenAI API usage.

You can also use it to analyze the saved questions and answers.

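
The cost saving comes from answering a repeated question without a second API call. The sketch below shows that idea with a generic SQLite-backed cache; it is not Chainlit's own cache, and the `AnswerCache` class, table name and normalization rule are assumptions for illustration.

```python
import sqlite3


class AnswerCache:
    """Question/answer cache stored in SQLite."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS qa_cache "
            "(question TEXT PRIMARY KEY, answer TEXT NOT NULL)"
        )

    @staticmethod
    def _normalize(question: str) -> str:
        # Treat questions that differ only in case or spacing as the same.
        return " ".join(question.lower().split())

    def get_or_compute(self, question: str, compute) -> str:
        key = self._normalize(question)
        row = self.conn.execute(
            "SELECT answer FROM qa_cache WHERE question = ?", (key,)
        ).fetchone()
        if row:
            return row[0]  # cache hit: no model call needed
        answer = compute(question)
        self.conn.execute(
            "INSERT INTO qa_cache (question, answer) VALUES (?, ?)", (key, answer)
        )
        self.conn.commit()
        return answer


calls = []


def expensive_llm(question: str) -> str:
    """Stand-in for a billed API call; records each invocation."""
    calls.append(question)
    return f"(stub answer to: {question})"


cache = AnswerCache()
first = cache.get_or_compute("How much is an MRI?", expensive_llm)
second = cache.get_or_compute("  how much is an MRI? ", expensive_llm)
print(len(calls), first == second)
```

Because the rows live in an ordinary table, the same file can later be queried to analyze what users asked.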
[Future] Serverless Architecture

- Serverless Architecture: Use AWS Lambda instead of AWS EC2

- reduce operation cost

Source: How to Create a FREE Custom Domain Name for Your Lambda URL - A Step by Step Tutorial
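
As a rough sketch of the serverless direction, a chatbot endpoint on AWS Lambda could look like the handler below. It assumes a Lambda function URL style event whose `body` is a JSON string with a `question` field (an assumed request shape), ignores base64-encoded bodies, and uses a hypothetical `answer_question` stub in place of the real LangChain call.

```python
import json


def answer_question(question: str) -> str:
    """Placeholder for the real chatbot logic (hypothetical)."""
    return f"(stub) You asked: {question}"


def _response(status: int, payload: dict) -> dict:
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context):
    """Entry point invoked by AWS Lambda for each HTTP request."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})
    if not isinstance(body, dict):
        return _response(400, {"error": "body must be a JSON object"})
    question = str(body.get("question", "")).strip()
    if not question:
        return _response(400, {"error": "missing 'question'"})
    return _response(200, {"answer": answer_question(question)})


if __name__ == "__main__":
    sample_event = {"body": json.dumps({"question": "How much is an MRI?"})}
    print(lambda_handler(sample_event, None))
```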

[Future] Different RAG implementations

Retrieval-Augmented Generation (RAG)

Source: RAG Cheatsheet V2.0 🚀, Steve Nouri, 2024-02-18
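
In its simplest form, RAG means retrieving relevant text first and then placing it in the prompt. The sketch below shows only that augmentation step, with naive word-overlap retrieval standing in for a real retriever. The documents, scoring rule and prompt wording are all assumptions for illustration.

```python
def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Naive retrieval: rank documents by words shared with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(q_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_rag_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Augment the question with the retrieved context before generation."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


# Made-up knowledge base.
DOCS = [
    "CMS issues civil monetary penalties for non-compliance",
    "Hospitals must publish machine readable files",
    "The cafeteria opens at 8 am",
]

print(build_rag_prompt("Who issues civil monetary penalties?", DOCS, k=1))
```

Swapping the retriever (keyword, vector, hybrid) while keeping the prompt assembly unchanged is one way different RAG variants can be compared.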

[Future] Interacting with APIs with LangChain

- Common enterprise software InfoSec practice: the application (backend API) subnet is separate from the database subnet. (Data Security)

- You can share an OpenAPI (Swagger) contract with LangChain. This helps reduce internal data leakage. (Data Privacy)

Share the interface instead of the data.
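
A small sketch of what "sharing the interface" could mean in practice: only a compact description of the API operations is handed to the model, never database rows. The OpenAPI fragment below is hypothetical, and `describe_operations` is an illustrative helper rather than a LangChain function.

```python
# Hypothetical OpenAPI fragment for a price lookup service.
SPEC = {
    "openapi": "3.0.0",
    "paths": {
        "/prices": {
            "get": {
                "summary": "Search procedure prices",
                "parameters": [
                    {"name": "payer", "in": "query"},
                    {"name": "procedure", "in": "query"},
                ],
            }
        }
    },
}


def describe_operations(spec: dict) -> list[str]:
    """Summarize each operation as one line the LLM can reason about."""
    lines = []
    for path, methods in spec.get("paths", {}).items():
        for method, operation in methods.items():
            params = ", ".join(p["name"] for p in operation.get("parameters", []))
            summary = operation.get("summary", "")
            lines.append(f"{method.upper()} {path}({params}): {summary}")
    return lines


for line in describe_operations(SPEC):
    print(line)
```

The model then proposes which endpoint to call with which parameters, and the backend API, not the model, touches the database.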

Q & A

Learn by Doing